Mar 2006

OpenSolaris für Anwender, Administratoren und Rechenzentren

ISBN 3-540-29236-5

published by Springer Verlag

While the Hypervisor API was published some time ago on the T1 web site, it hasn’t been possible so far to give the implementation a test run.

Recent news, however, gives reason to hope that the wait to actually access the hypervisor and partition a T1 system into Logical Domains is nearing an end. The LDoms v1.0 software has been in Nevada since build 41 and has now been included in the GA release Solaris 10 11/06.

From the presentation by Ashley Saulsbury I take it that the hypervisor is layered under the OBP layer of the individual logical domains. This would imply that it is either implemented in OBP or running in an underlying service domain/partition of its own.

So where is the hypervisor? In firmware (OBP), or is there a separate (minimal?) OS instance running it? Is there a service domain for the hypervisor and the virtualization layer? If so, what happens to the domains if the service domain is rebooted or crashes? What happens if a domain gets shut down with power-off? Does it terminate the domain or the platform? (Yes, I know what I’d wish to happen, but I’ve also learned not to expect something new to stick to my expectations :)

I’d also like to test out how much overhead the hypervisor (and the individual OS instances in each domain) represents, compared to a similar zones configuration.

Assuming that each domain has an individual OBP layer, what is visible at the level of the OBP?

PCI Express is supposed to be mapped into the address space of the logical domain, so the OBP running there can detect the devices. Is a PCI card thus limited to a single domain, or does it get virtualized and shared?

A bit about console access to logical domains is described in the manual page for vntsd(1M). This virtual network terminal server daemon implements access based on the telnet protocol, similar to a “virtual annex”. Where does it run? Since the slides state that the hypervisor is “not an OS”, does it run on every logical domain?

How is network access passed on?

Another side effect of implementing IDN as a mapped memory buffer was that it couldn’t be used for cluster heartbeat: a “mailbox” just doesn’t provide that kind of signal.

How is storage shared and represented to the individual domains? There is more than one way to do that; which route has been chosen and implemented so far?

If I suspend a logical domain, move the image to another system, and then reload the domain from the suspended image, will it live?

This would enable interesting perspectives for clustering unique to the Niagara platform.

Can cores be assigned to individual domains? What happens if such a core gets deactivated from the service partition?

Is it possible to run IDN, and listen to its traffic from

Is it possible to place a domain as router or firewall between other logical domains?

I’m just imagining a T1000 running a busy beehive of domains around a honeypot installation.

Footnotes:

1. IDN, short for Inter-Domain Networking, was used in the E10k. It was implemented as a special ASIC configuration state (board mask) that had to be scheduled and that basically mapped a reserved memory buffer from one domain to another, which took a certain toll on the system. By design, IDN violated the principle that domains should be separated from each other. It also created a dependency where, in certain cases, a failed domain could cause all other domains within the same IDN to follow its path downhill.

So far, I haven’t been able to give LDoms the try I would have wished for: neither the firmware images nor the LDoms Manager software are available outside Sun yet.

My trial period isn’t over yet, but it looks like I’ll have to return this wonderful toy before the support for logical domains hits the streets.

I had asked for permission to upgrade the OBP of the Try-and-Buy machine in case I get access to the image in time. Not only was I given immediate clearance for the procedure; if all goes well, I’ll also get the image. So there’s still hope!

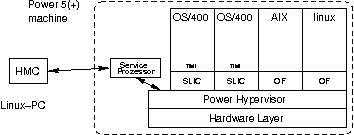

A lot of people have asked me why I always speak of iSeries when comparing UNIX machines. Well, that is quite simple: for IBM’s current PowerPC-based machines, it’s the same hardware. For the “low-cost” entry-level systems, a System p 520 is the same hardware as a System i 520, a System p 550 is the same hardware as a System i 550, and so on. A little PROM makes all the difference. Ever wondered why your firmware upgrade on the OpenPower machines didn’t work? Well, a little PROM tells the box about its personality, and the firmware CD-ROM you had to boot from for the upgrade had a PASE environment on it, which is a kind of AIX runtime environment. So booting the firmware upgrade CD-ROM on the OpenPower box found a license violation: it is a Linux-only box and not an AIX box.

But there is far more to it. The IBM boxes have an abstraction layer between the hardware and the operating system called the Power hypervisor. This Power hypervisor allows for logical partitioning and is administered by pushbuttons on the front panel if you are only running two LPAR partitions. If you want more, you need to buy an extra Hardware Management Console (HMC), something related to what SSPs were in the E10k world or the internal CP1500 cPCI SPARC board in the SunFire 15000 or the later SF25k. This HMC interfaces with an internal non-redundant (at least for the small boxes) service processor to allow for administration of the LPAR partitions. The LPAR partitions are logical partitions that can run different kinds of PowerPC-based operating systems. So far there is no PowerPC Solaris for System i (iSeries), since the Power hypervisor doesn’t like that, as with the OpenPower firmware upgrade above. The major difference between System i and System p is the price. If you only run AIX and don’t buy additional software such as databases, the System p solution costs less. If you want to have a database on the machine, need more partitions, and want to have a development environment, then System i becomes more cost-effective. This layering in the machine is shown in the following figure:

In this graphic, OF stands for OpenFirmware, the glue code between the hardware and AIX or PowerPC Linux. SLIC stands for System Licensed Internal Code (no, it is not a bootable license manager; it is the OS/400 kernel, even though it behaves somewhat like a license manager :( ). What the Power hypervisor does is share time slices of physical CPUs, as virtual CPUs, and memory among the LPAR partitions. It also provides virtual serial (for the UNIX consoles), virtual SCSI, and virtual Ethernet. So all network communication between the LPARs happens at the hypervisor layer, and even disk I/O does. The virtual serial lines are accessible from the HMC, so if you want a console for AIX and Linux, you need to buy an HMC. I do not understand why the HMC is not shipped with the base machine; this is marketing stuff. Consolidation with System i (or iSeries) or System p (or pSeries) means that you can put all of your boxes into one powerful new machine. But you do not consolidate platforms: you still need someone to administer the Linux partitions, someone to administer the AIX partitions, and someone for OS/400, so you stay with nearly the same manpower.
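To make the idea of handing out time slices of physical CPUs to partitions a bit more tangible, here is a minimal toy sketch in Python. It is only an illustration of proportional sharing under my own assumptions, not how the Power hypervisor actually schedules: the function name `share_timeslices`, the partition names, and the integer weights are all made up for this example.

```python
def share_timeslices(total_slices, weights):
    """Split a pool of CPU time slices among partitions by weight.

    total_slices: number of time slices available in one scheduling period
                  (hypothetical unit, e.g. 100 slices per physical CPU).
    weights:      dict mapping partition name -> non-negative integer weight
                  (hypothetical stand-in for a partition's entitlement).
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("at least one partition needs a positive weight")

    # Integer part of each partition's proportional share.
    shares = {name: total_slices * w // total_weight
              for name, w in weights.items()}

    # Slices lost to rounding go to the largest fractional remainders first.
    leftover = total_slices - sum(shares.values())
    by_remainder = sorted(
        weights,
        key=lambda name: (total_slices * weights[name]) % total_weight,
        reverse=True,
    )
    for name in by_remainder[:leftover]:
        shares[name] += 1
    return shares


if __name__ == "__main__":
    # Two physical CPUs at 100 slices each, shared by three partitions.
    print(share_timeslices(200, {"aix": 2, "linux": 1, "os400": 1}))
    # prints {'aix': 100, 'linux': 50, 'os400': 50}
```

A real hypervisor additionally has to deal with idle partitions, caps, and dispatch latency; the sketch only shows the bookkeeping of dividing a fixed pool.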

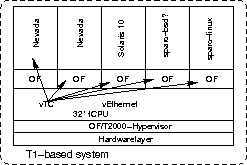

How does all that compare to a T2000? So far only zones have been released. Sun developed something they call logical domains, LDoms, that allows for hardware abstraction and logical partitioning. It runs on the T2000 only, for now. A fully stuffed T2000 costs about 16000 EUR; an entry-level iSeries 550 with two CPUs, some 4 GB of RAM, and some disks costs about 25000 EUR, and don’t think you have all CPUs or cores up, running, and licensed in this configuration. Remember: the Power hypervisor. So strict partitioning as defined by LDoms brings logical partitioning to the T2000. From what I gleaned from the documentation accessible to me so far, the LDoms model looks a bit similar to LPAR phase II at IBM, where IBM had some kind of an OS/400 service partition that allowed you to configure LPARs.

Sun’s LDoms supply a virtual terminal server, so you have consoles for the partitions, but I guess this comes out of the UNIX history: you don’t like flying without any sight or instruments at high speed through caves, do you? So you need a console for a partition! The T2000 with LDoms seems to support this; at IBM you need to buy an HMC (a Linux PC with HMC software). With Crossbow, virtual networking comes to Solaris. LDoms seem to give all the advantages of logical partitioning that IBM’s boxes have, but hopefully a bit faster and with clearly less power consumption. Sun offers far more open licensing, of course, and you do not need a Windows PC to administer the machine (iSeries OS/400 is administered from such a thing). A T2000 is fast, has up to 8 cores (32 thread CPUs) and 16 GB of RAM, and has a good price, and those who do not really need the pure power are more interested in partitioning. The Solaris zones have some restrictions, such as no NFS server in zones. That is where LDoms come in. That’s why I want to actually compare LDoms and LPARs. It looks like it is getting cold out there for IBM boxes….

Hopefully I will get hold of the missing parts of the software before the try-and-buy period is over, because I would like to show LDoms in the Solaris world next week in a tutorial, and I want to explain LDoms in the OpenSolaris book as well as clustering of LDoms in the upcoming cluster book. Too bad that the Solaris Developer Conference I am helping to organize is at the end of February. It would be a nice place for an LDoms tutorial….